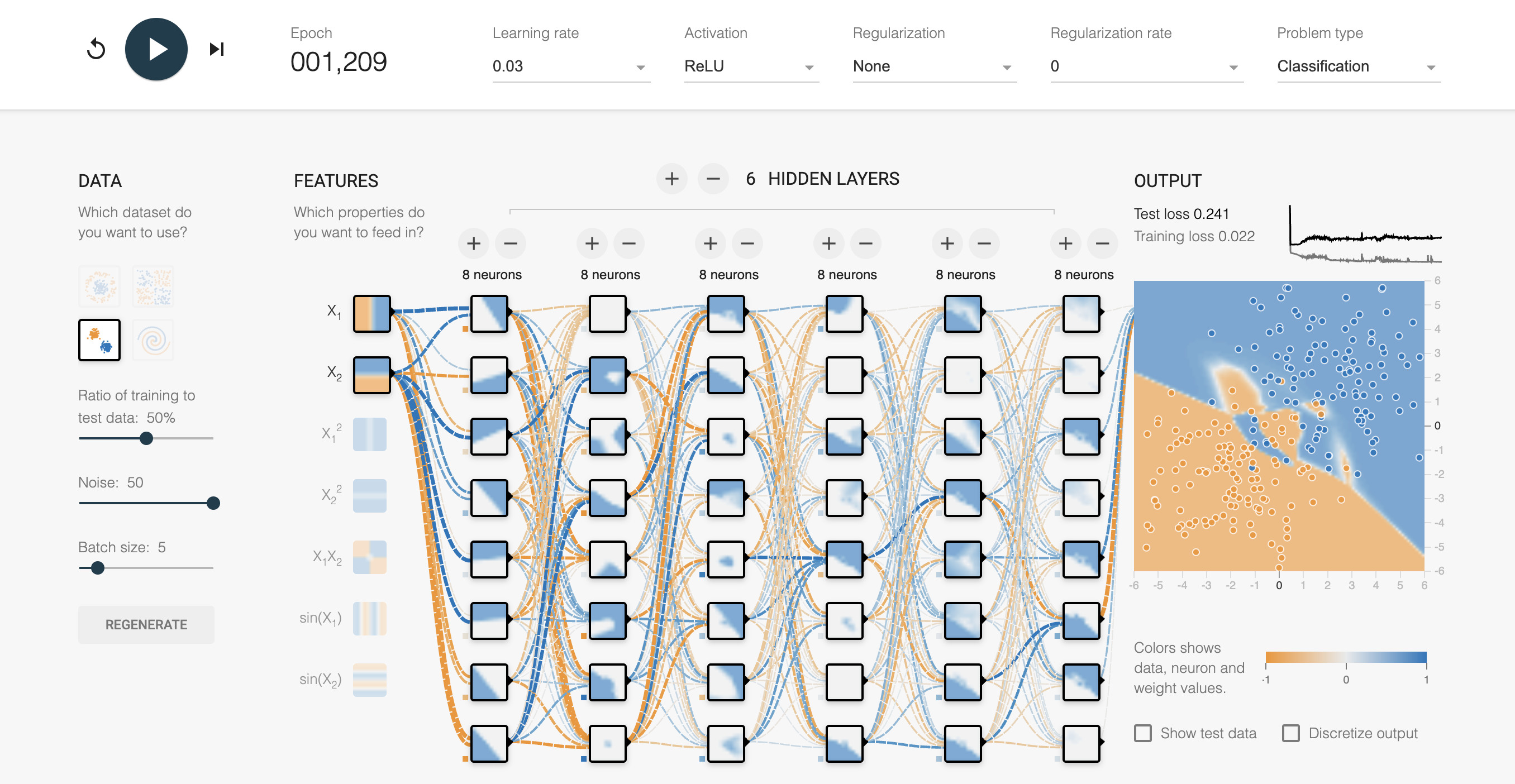

Exercise 5: Regularization in the Playground

Given the ridiculously overfit network below:

- add an L1 regularization term with a big enough coefficient to simplify the model,
- do the same with an L2 regularization term.
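As a reminder of what the two terms compute, here is a minimal sketch in plain Python. The function names and the example weights are hypothetical, chosen only for illustration. The scaling is also an assumption: some implementations multiply the L2 term by an extra constant such as 1/2, and this sketch does not claim to reproduce what the Playground does internally.

```python
def l1_penalty(weights, coeff):
    """L1 term: coefficient times the sum of absolute weight values."""
    return coeff * sum(abs(w) for w in weights)


def l2_penalty(weights, coeff):
    """L2 term: coefficient times the sum of squared weight values.

    Assumption: no extra 1/2 factor; conventions differ between libraries.
    """
    return coeff * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, coeff, kind):
    """Add the chosen penalty ("L1" or "L2") to an already computed data loss."""
    if kind == "L1":
        return data_loss + l1_penalty(weights, coeff)
    if kind == "L2":
        return data_loss + l2_penalty(weights, coeff)
    raise ValueError(f"unknown regularization kind: {kind!r}")


# Hypothetical flattened weights of a tiny network, for illustration only.
example_weights = [0.5, -2.0, 0.0]
example_coeff = 0.1

print("L1 penalty:", l1_penalty(example_weights, example_coeff))
print("L2 penalty:", l2_penalty(example_weights, example_coeff))
```

In both cases the optimizer minimizes the data loss plus the penalty, so a larger coefficient puts more pressure on the weights. Try several coefficient values in the Playground and compare what each penalty does to the individual weights.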

Do you notice any difference between the two cases in the resulting weights (the lines between the layers)?

Notice the plot in the upper right:

What is it telling you? How does it change as you add regularization?