Overfitting Check

Overview

Teaching: 5 min

Exercises: 5 min

Questions

How do I check whether my model has overfitted?

Objectives

Determine whether your models are overfitted.

Is there any overfitting?

In this section we will check whether there has been any overfitting during the model training phase. As discussed in the lesson on Mathematical Foundations, overfitting can be an unwanted fly in the ointment, so it should be avoided!

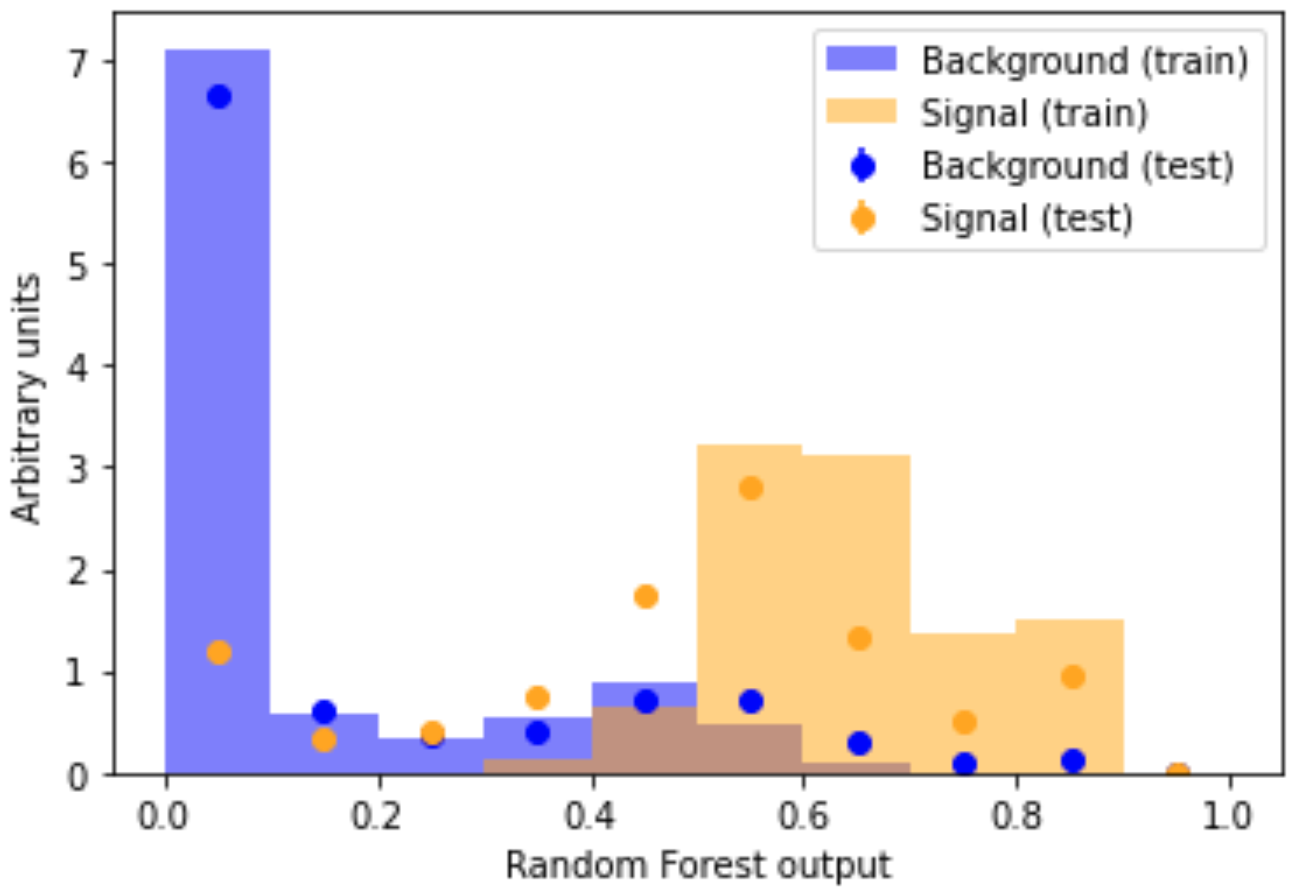

Comparing a machine learning model’s output distribution for the training and testing sets is a popular way to check for overfitting in High Energy Physics. The compare_train_test() function plots the shape of the model’s decision function for each class in the test set and overlays it on the decision function for the same class in the training set.
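To make the idea concrete, here is a minimal sketch of the comparison that sits behind such a plot. It is not the compare_train_test() implementation: the helper names normalised_histogram and train_test_shapes are hypothetical, the scores are made-up toy numbers, and the model output is assumed to lie between 0 and 1. It only bins the scores per class with shared bin edges, so that the training and test shapes can be compared bin by bin.

```python
def normalised_histogram(scores, n_bins=10, lo=0.0, hi=1.0):
    """Return the fraction of scores in each of n_bins equal-width bins on [lo, hi]."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for s in scores:
        # Clamp the index so that values at (or beyond) the edges land in the end bins.
        idx = int((s - lo) / width)
        idx = max(0, min(idx, n_bins - 1))
        counts[idx] += 1
    total = len(scores)
    return [c / total for c in counts] if total else [0.0] * n_bins


def train_test_shapes(train_scores, train_labels, test_scores, test_labels, n_bins=10):
    """Binned score shapes per class, for the training set and the test set."""
    shapes = {}
    for cls in sorted(set(train_labels) | set(test_labels)):
        train = [s for s, y in zip(train_scores, train_labels) if y == cls]
        test = [s for s, y in zip(test_scores, test_labels) if y == cls]
        shapes[cls] = {
            "train": normalised_histogram(train, n_bins),  # drawn as bars in the plot
            "test": normalised_histogram(test, n_bins),    # drawn as dots in the plot
        }
    return shapes


# Toy scores, invented for illustration: label 0 = background, label 1 = signal.
train_scores = [0.05, 0.15, 0.25, 0.35, 0.65, 0.75, 0.85, 0.95]
train_labels = [0, 0, 0, 0, 1, 1, 1, 1]
test_scores = [0.08, 0.22, 0.72, 0.91]
test_labels = [0, 0, 1, 1]

shapes = train_test_shapes(train_scores, train_labels, test_scores, test_labels, n_bins=5)
for cls, hists in shapes.items():
    print(cls, hists)
```

Normalising each histogram to fractions matters because the training and test sets usually differ in size; only the shapes should be compared.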


The code to plot the overfitting check is a bit long, so once again you can see the function definition here.

from my_functions import compare_train_test

compare_train_test(
    RF_clf, X_train_scaled, y_train, X_test_scaled, y_test, "Random Forest output"
)

If overfitting were present, the dots (test set) would lie far from the bars (training set). Look back at the figure in the Overfitting section of the Mathematical Foundations lesson for a brief explanation. Overfitting might look something like this

As discussed in the Mathematical Foundations lesson, there are techniques to prevent overfitting. For instance, you could try reducing the number of parameters in your model, e.g. for a neural network, reducing the number of neurons.

Our orange signal dots (test set) nicely overlap with our orange signal histogram bars (training set). The same goes for the blue background. This overlap indicates that no overtraining is present. Happy days!
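If you would like a number to go with the visual check, one option is a two-sample Kolmogorov-Smirnov statistic between the training and test scores of a single class. The sketch below is an assumption on our part rather than something compare_train_test() is known to compute: the function name ks_statistic is hypothetical and the scores are invented. The statistic is the largest gap between the two empirical cumulative distributions, so values near 0 mean similar shapes and values near 1 mean very different shapes.

```python
def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    i = j = 0
    max_gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Step past every value equal to x in both samples, so ties are handled together.
        while i < len(a) and a[i] == x:
            i += 1
        while j < len(b) and b[j] == x:
            j += 1
        # i / len(a) and j / len(b) are the empirical CDFs evaluated at x.
        max_gap = max(max_gap, abs(i / len(a) - j / len(b)))
    return max_gap


# Invented signal scores for illustration only.
train_signal = [0.62, 0.71, 0.80, 0.88, 0.93]
test_signal = [0.60, 0.74, 0.79, 0.90]
print("KS statistic (signal):", ks_statistic(train_signal, test_signal))
```

A small value is consistent with the dots sitting on the bars. What counts as "small" depends on the sample sizes, so treat the number as a companion to the plot, not a replacement for it.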

Challenge

Make the same overfitting check for your neural network and decide whether any overfitting is present.

Solution

compare_train_test(NN_clf, X_train_scaled, y_train, X_test_scaled, y_test, 'Neural Network output')

Now that we’ve checked for overfitting, we can go on to comparing our machine learning models!


Key Points

It’s a good idea to check your models for overfitting.